The Black Box: Language Models

Unveiling the Inner Workings of Language Models Through Human Computation and LeetPrompt's Interactive Problem-Solving Interface.

My fascination with language models is a smaller component of a larger fascination with computation. With AI, I can respond to emails with a click, brainstorm faster than I ever could, and bounce ideas off of a chatbot. Through architectural innovation, efficiency improvements, and higher-quality datasets, the function of language models has evolved from "cool tech" to an essential tool in my daily life.

There are many issues, however, with readily accepting this brand-new type of computation into our lives. The problem I’ve been working on is what's known as the black box problem: we can't see a language model's thought process.

The Black Box

The black box problem in the context of language models arises from the complexity and opacity of their decision-making. Language models are deep neural networks: they operate through many layers of multi-dimensional mathematical transformations that map input data to output predictions. These transformations are highly abstract and nonlinear, making it difficult for humans to interpret how a specific input leads to a particular output. The sheer number of parameters and connections within these models further obscures their inner workings. Despite efforts to visualize and interpret model behavior, the high-dimensional nature of neural networks makes complete transparency extremely difficult to achieve. As a result, we cannot directly observe the thought processes or decision-making mechanisms of language models, which is why they are called "black boxes."
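To make "layers of nonlinear transformations" a bit more concrete, here is a toy sketch in Python. It is not how any real language model is implemented: the function names and every weight below are made-up illustrative values, and real models have billions of parameters rather than a dozen.

```python
import math
from typing import List

def layer(inputs: List[float], weights: List[List[float]], biases: List[float]) -> List[float]:
    """One dense layer: a weighted sum per output unit, followed by a tanh nonlinearity."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def tiny_network(inputs: List[float]) -> List[float]:
    """Two stacked layers with arbitrary, hand-picked (hypothetical) weights."""
    w1 = [[0.5, -1.2], [0.8, 0.3], [-0.7, 0.9]]  # 2 inputs -> 3 hidden units
    b1 = [0.1, -0.2, 0.0]
    w2 = [[1.0, -0.5, 0.25]]                     # 3 hidden units -> 1 output
    b2 = [0.05]
    hidden = layer(inputs, w1, b1)
    return layer(hidden, w2, b2)

print(tiny_network([1.0, 2.0]))
```

Even in this tiny example, no single weight "explains" the output on its own; the answer comes from all of them interacting through the nonlinearity.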

This lack of clarity raises concerns about bias, accountability, and the potential for unintended consequences in decision-making processes, especially in production. Despite their remarkable capabilities, grappling with the black box problem remains a crucial challenge in ensuring responsible and ethical deployment of language models across various domains.

LeetPrompt

I started working in the RAIVN lab at the Allen School in January this year. The lab focuses on AI, Reasoning, and Vision. At first, I just wanted to give research a shot, but I quickly fell in love with its continuous exploratory thinking and limitless creativity. I'm working on LeetPrompt, a game that gives us a peephole into the black box. Let me explain how.

Luis von Ahn, Duolingo CEO and CMU professor, spoke about a concept called human computation at a Google TechTalk in 2006. He claimed that the next best way of harvesting data was to get humans to do it, sometimes without them even realizing it. A familiar example is Google's reCAPTCHA, which grew out of his work: users think they are only passing a security check, but they are also labeling data, such as images, for Google. Von Ahn took a similar approach with Duolingo. By letting users practice a language on its interface, Duolingo amassed an enormous amount of text and speech translation data. He had figured out a billion-dollar way to leverage humans to collectively augment computation.

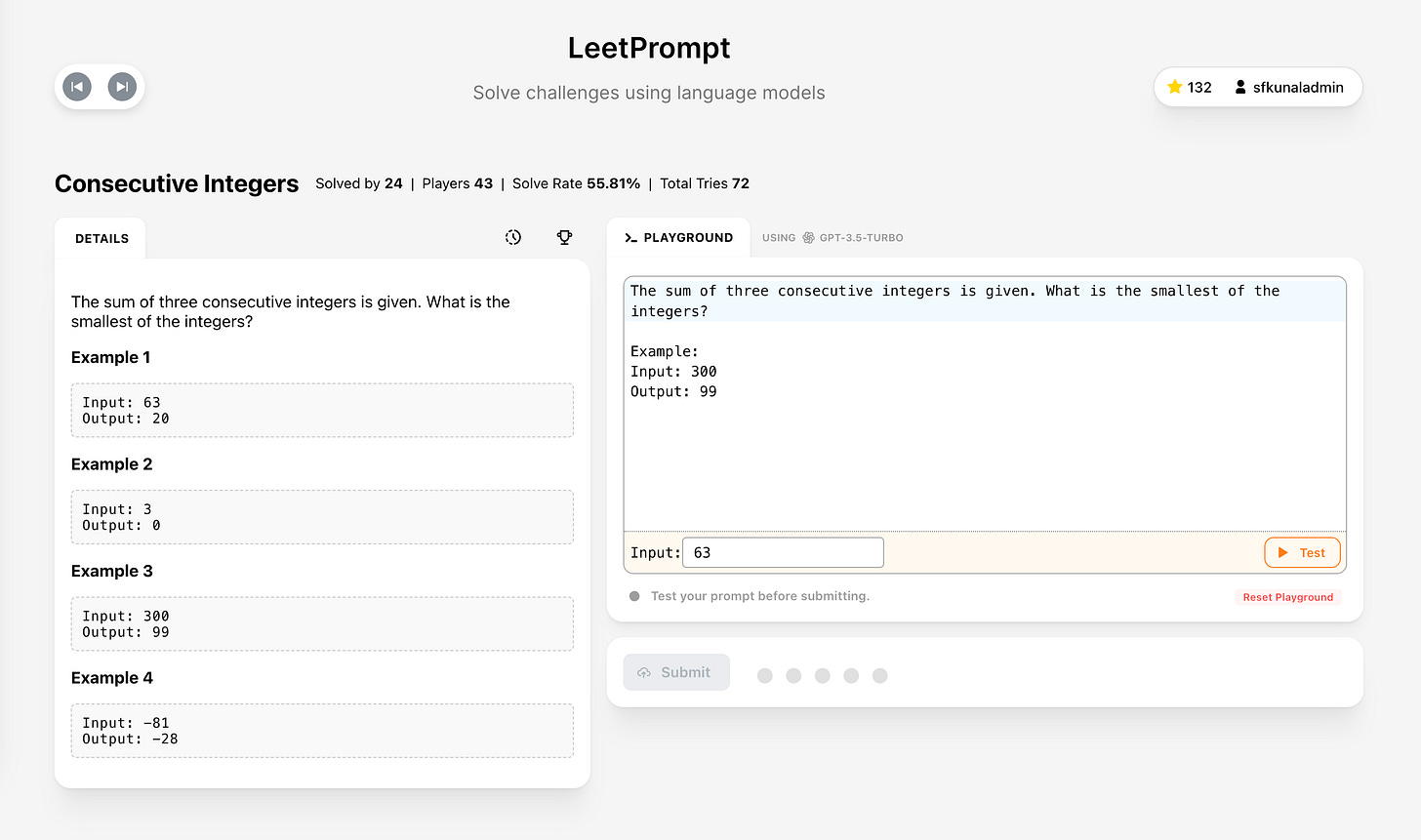

We took a similar approach to human computation with LeetPrompt, in that LeetPrompt is a game. Taking inspiration from LeetCode, LeetPrompt is a problem-solving interface where users write language model prompts to solve problems. Each prompt is run against hidden test cases, and the user is awarded points for accuracy and brevity.
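A rough sketch of what "points for accuracy and brevity" could look like in Python follows. This is not LeetPrompt's actual scoring code: the formula, the `brevity_weight` parameter, and the `toy_model` stand-in (used here instead of a real language model call) are all assumptions made for illustration.

```python
from typing import Callable, List, Tuple

# Hypothetical types: a "model" is any function that takes (prompt, input) and returns text.
Model = Callable[[str, str], str]
TestCase = Tuple[str, str]  # (input, expected output)

def accuracy(prompt: str, model: Model, hidden_tests: List[TestCase]) -> float:
    """Fraction of hidden test cases where the model's output matches the expected output."""
    passed = sum(
        1 for test_input, expected in hidden_tests
        if model(prompt, test_input).strip() == expected
    )
    return passed / len(hidden_tests)

def score(prompt: str, model: Model, hidden_tests: List[TestCase],
          brevity_weight: float = 0.1) -> float:
    """Assumed formula: accuracy plus a small bonus that grows as the prompt gets shorter."""
    acc = accuracy(prompt, model, hidden_tests)
    word_count = len(prompt.split())
    brevity = 1.0 / (1.0 + word_count)  # in (0, 1]; shorter prompts score higher
    return acc + brevity_weight * acc * brevity

def toy_model(prompt: str, text: str) -> str:
    """Stand-in for a real LLM call: upper-cases the input only if the prompt asks for it."""
    return text.upper() if "uppercase" in prompt.lower() else text

tests = [("abc", "ABC"), ("Hi", "HI")]
print(score("Uppercase the input", toy_model, tests))
print(score("Repeat the input", toy_model, tests))
```

Multiplying the brevity bonus by accuracy means a short prompt that fails every test still scores zero, so brevity only breaks ties between prompts that actually work.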

For example, imagine the problem is Reverse A String. Given an input string, we are to write a language model prompt that can reverse it.

Let's say Player A came up with the prompt:

"Reverse the given word"

and Player B came up with the prompt:

"Reverse the given string by creating a new string and adding letters in reverse order. Think step by step."

On LeetPrompt, Player B ends up passing many more test cases than Player A, meaning that Player B's prompt is functionally more effective. By running this at scale and collecting a lot of gameplay data, we can start to see associations in the data. For example, telling the model to "think step by step" tends to be very effective for problems that require several steps of computation. This is a valuable insight into a specific language model's black box. We've released LeetPrompt publicly and are in the process of analyzing the data from our studies.

On LeetPrompt, users craft language model prompts aimed at solving a diverse array of problems spanning multiple problem domains. The platform's interactive nature encourages users to experiment with different instruction strategies, thereby facilitating a deeper understanding of LLM capabilities and limitations. As users engage with LeetPrompt, their interactions are automatically evaluated, yielding insights into the effectiveness of various prompt designs and the underlying dynamics of human-LLM interactions.

One of the key contributions of LeetPrompt lies in its ability to shed light on the black-box nature of language models. By analyzing the prompts generated by users and their corresponding success rates in problem-solving, researchers can glean valuable insights into the inner workings of LLMs. In addition, the platform's data-driven approach enables the identification of patterns and correlations that may inform the design of more effective prompts and enhance the overall performance of language models. In essence, LeetPrompt represents a novel approach to tackling the interpretability challenge inherent in evaluating LLMs, offering a glimpse into the intricate interplay between human cognition and computational processes.
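The kind of pattern-finding described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the record format (a prompt paired with its pass rate), the helper names, and the numbers in the example are all made up, and they are not real LeetPrompt data or results.

```python
from statistics import mean
from typing import Dict, List, Tuple

def normalize(text: str) -> str:
    """Lower-case and treat hyphens as spaces, so 'step-by-step' matches 'step by step'."""
    return text.lower().replace("-", " ")

def pass_rate_by_phrase(records: List[Tuple[str, float]], phrase: str) -> Dict[str, float]:
    """Compare the mean pass rate of prompts that contain a phrase against those that do not."""
    target = normalize(phrase)
    with_phrase = [rate for prompt, rate in records if target in normalize(prompt)]
    without_phrase = [rate for prompt, rate in records if target not in normalize(prompt)]
    return {
        "with": mean(with_phrase) if with_phrase else float("nan"),
        "without": mean(without_phrase) if without_phrase else float("nan"),
    }

# Made-up example records: (prompt text, fraction of hidden test cases passed).
records = [
    ("Reverse the given word", 0.4),
    ("Reverse the given string. Think step by step.", 0.8),
    ("Think step-by-step and reverse the input", 0.6),
]
print(pass_rate_by_phrase(records, "think step by step"))
```

A gap between the two averages is only an association, not proof that the phrase caused the improvement, so a real analysis would also need to control for the problem, the model, and the rest of the prompt.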

How I Built It

As the resident lead for LeetPrompt, I had technical oversight of the codebase (React + Next.js + MantineUI + Django + OpenAI). My advisor helped a ton with the UX, while I was responsible for the frontend and backend. We ran user studies to continuously iterate on the site's design until we landed on something that felt reasonably intuitive and fun. We also collaborated with researchers across the country and drew on industry-standard benchmark datasets to create strong, testable questions. Right now, we're exploring our data and getting ready to start writing our paper.

Conclusion

Collectively, through endeavors like LeetPrompt, the AI community has embarked on a quest to understand the inner workings of language models by harnessing the power of human computation. This effort not only offers valuable insights into the limitations and capabilities of language models but also paves the way for more transparent and accountable AI systems in the future. As we continue to iterate, collaborate, and explore, the path ahead promises new discoveries and innovations in the realm of computational linguistics. Together, we are taking strides towards a future where understanding and harnessing the power of language models is not just a possibility but a necessity for responsible AI deployment.